At the end of last week, Softwire opened its doors to the public for an evening of Lightning Talks with a data engineering flavour. Take a look at the event page for speaker bios and talk details.

For those of you who are new to the idea of Lightning Talks, this is a talk format we love at Softwire: we challenge speakers to squeeze the useful content of a much longer talk into just six minutes. The audience counts each speaker in, and a giant timer facing the audience ticks down from their first word. When the timer reaches zero, an obnoxious klaxon cuts the speaker off with absolutely zero mercy.

The point of all of this is focused, light-hearted education and networking goodness, without having to sit through hours of lectures to get it. It’s also great for speakers, who can share their passions without spending days preparing. The emphasis is on entertainment rather than polished PowerPoint slides, so cool points are awarded for finishing with seconds to spare, as well as for bad clip art, dodgy fonts and terrible jokes. And there were lots of these this time…heaven!

And all over by 20:30, so perfect for a school night, with free pizza and an hour for networking: what’s not to love?!

Fancy hearing what the talks were about this time? Okay, three, two, one…GO!

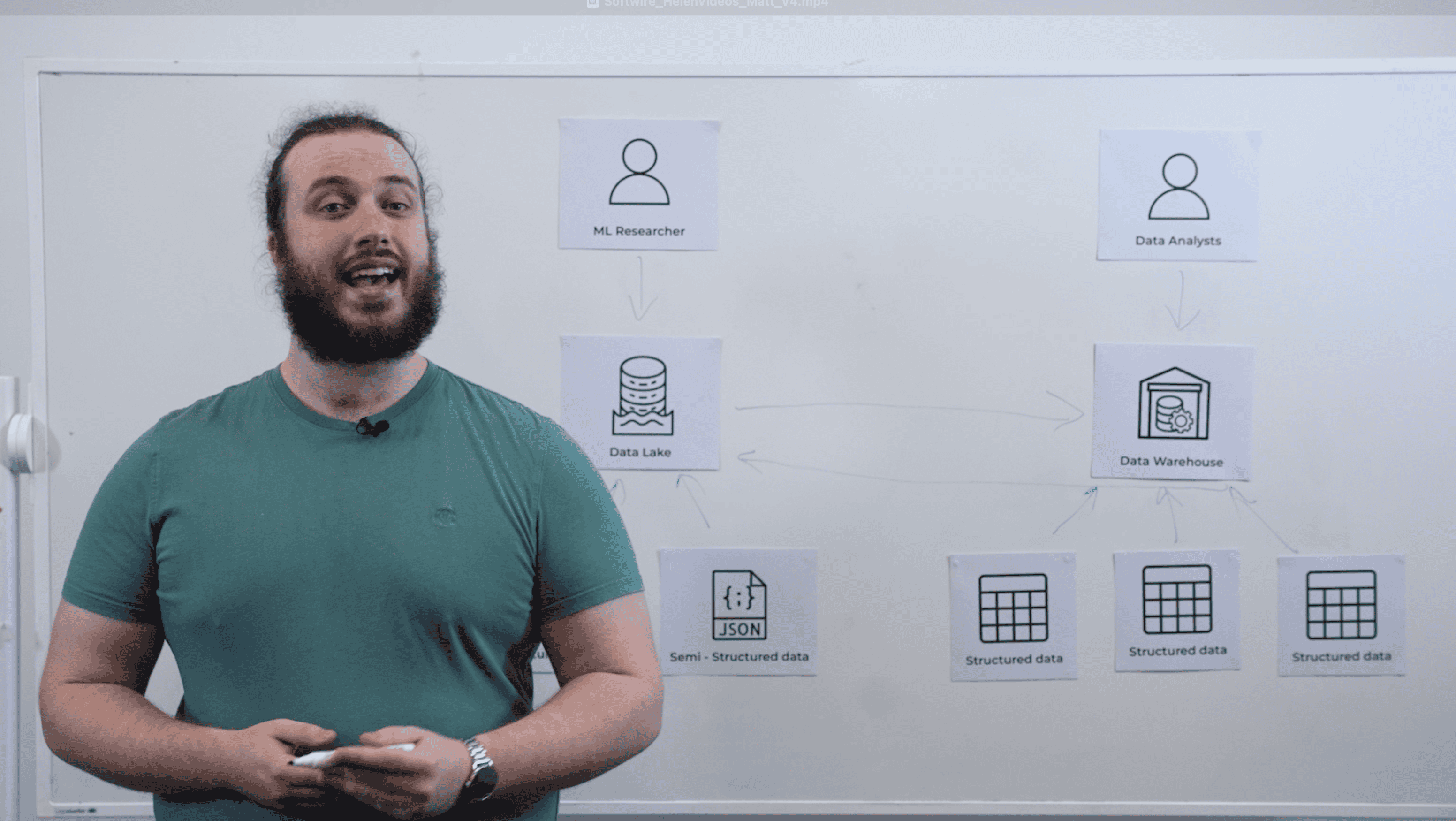

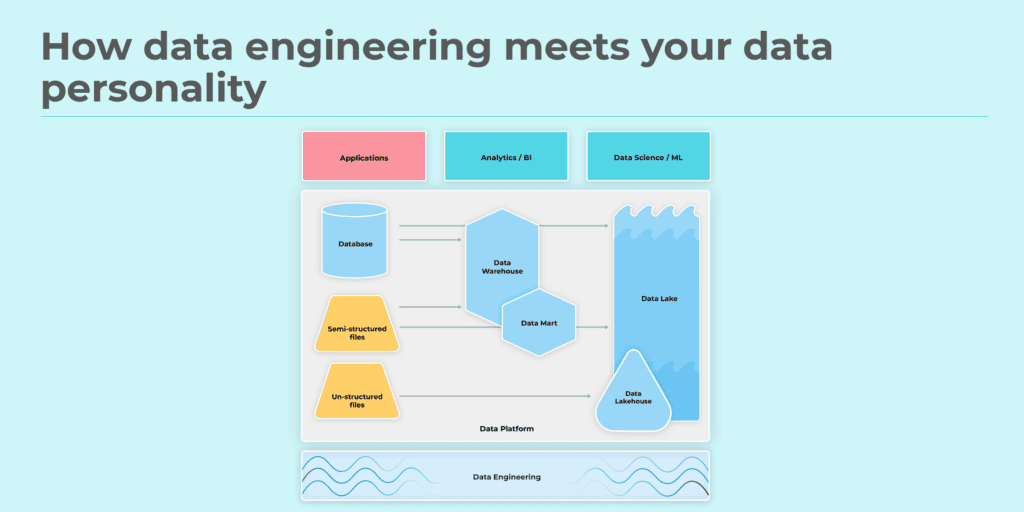

First up, Laura Hughes’ Data Personality Quiz (which type are you?) gave us a tour of the different enterprise uses of data and the data engineering that supports them. There were some Data Gatherers in the audience, plenty of Data Analysts, and even a handful of Data Scientists keen and hungry to dive into the deep blue data lake. Laura recently wrote an article demystifying various data terms – do check it out; it’s really rather good.

Alice Selway-Clarke covered the practicalities of building data pipelines capable of processing hundreds of terabytes of imaging data – here, pictures of eyes – enabling ground-breaking research into the detection of Alzheimer’s and Parkinson’s. She explained how her team overcame practical difficulties in extracting, linking and anonymising the source data, and which tools were (and which weren’t) useful for doing so.

And all this while a jaunty, periwinkle-blue WordArt “oh no” on the side of the slides grew increasingly large as the scale of the original problem became apparent.

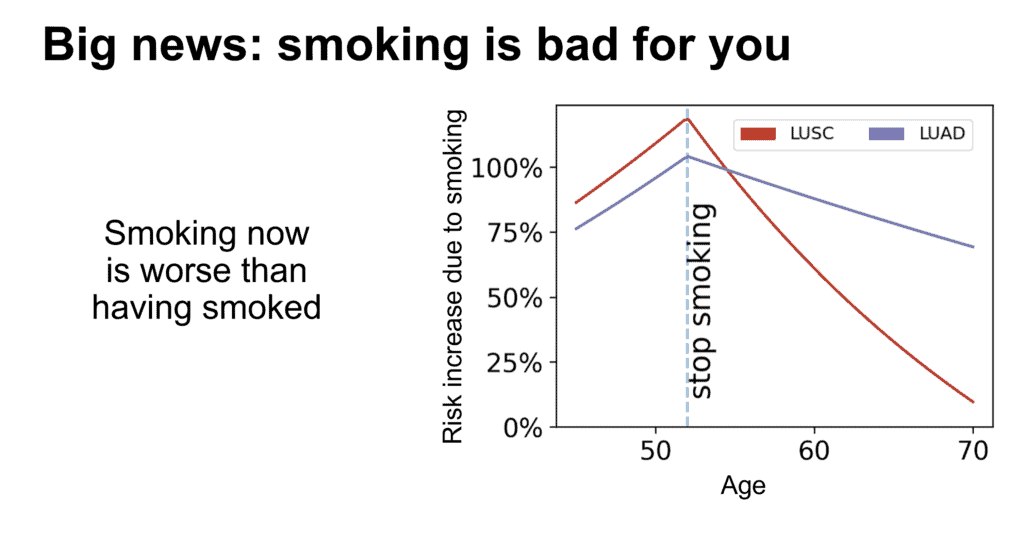

Hugh Selway-Clarke, a PhD student in UCL’s Department of Respiratory Medicine, gave a fascinating talk on the mechanistic modelling techniques he has used in his research into how the risk of lung cancer decreases following smoking cessation. He explained how he combined synthetic data with approximate Bayesian computation (ABC) and sequential Monte Carlo methods to extract valid insights from just 16 patients, testing assumptions about the changing proportions of healthy and smoke-damaged cells.
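For anyone curious what approximate Bayesian computation looks like in practice, here is a minimal sketch in Python. It is deliberately much simpler than the method in the talk: plain ABC rejection sampling rather than sequential Monte Carlo, with a hypothetical binomial simulator for the number of smoke-damaged cells in a sample, a uniform prior, and made-up numbers (30 damaged cells out of 100). The idea is the same, though: draw a candidate parameter from the prior, simulate data with it, and keep the candidate only if the simulated data land close enough to what was observed. Roughly speaking, ABC-SMC refines this by running several rounds with a shrinking tolerance, so fewer simulations are wasted.

```python
import random
import statistics


def simulate_damaged_count(p, n_cells, rng):
    """Hypothetical toy simulator: each of n_cells is damaged with probability p."""
    return sum(1 for _ in range(n_cells) if rng.random() < p)


def abc_rejection(observed, n_cells, n_draws=20_000, tolerance=2, seed=0):
    """ABC rejection sampling: keep prior draws whose simulated count is
    within `tolerance` of the observed count."""
    rng = random.Random(seed)  # seeded so the sketch is reproducible
    accepted = []
    for _ in range(n_draws):
        p = rng.random()  # candidate drawn from a Uniform(0, 1) prior
        simulated = simulate_damaged_count(p, n_cells, rng)
        if abs(simulated - observed) <= tolerance:
            accepted.append(p)
    return accepted


# Made-up observation for illustration: 30 damaged cells out of 100 sampled.
posterior = abc_rejection(observed=30, n_cells=100)
print(len(posterior), statistics.mean(posterior))
```

The accepted values approximate the posterior for the damaged-cell proportion without ever writing down a likelihood, which is what makes ABC attractive for mechanistic models whose likelihood is intractable.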

Matt Barnfield then opened the doors of Restaurant Barnfield, giving us a light-hearted tour of how to get as little value as possible from your data. His menu featured Perplexing Pasta, the Sauce of Obfuscation, and a choice between Hand-Rolled Garlic Bread and Cool Tech Salad for a side dish. He covered topics such as how to ensure your data is kept in silos, how to force analysts to repeat the same manual processes, how to ensure your reports are flaky and unauditable, and how to choose data tooling to minimise flexibility while making sure to court dependency hell.

Patrick Beldon, founder and CEO of Cortirio, introduced his medical device, which uses complex optical modelling techniques to infer, from a quick and harmless infrared scan of the brain, the likelihood that a patient has had a stroke. It all fits inside a self-contained headband that could in future be carried in ambulances. He discussed the risks of “just throwing AI at everything” for medical devices (hint: regulators regulate these for a reason), but also the opportunities he foresees for the properly regulated use of AI in devices like his.

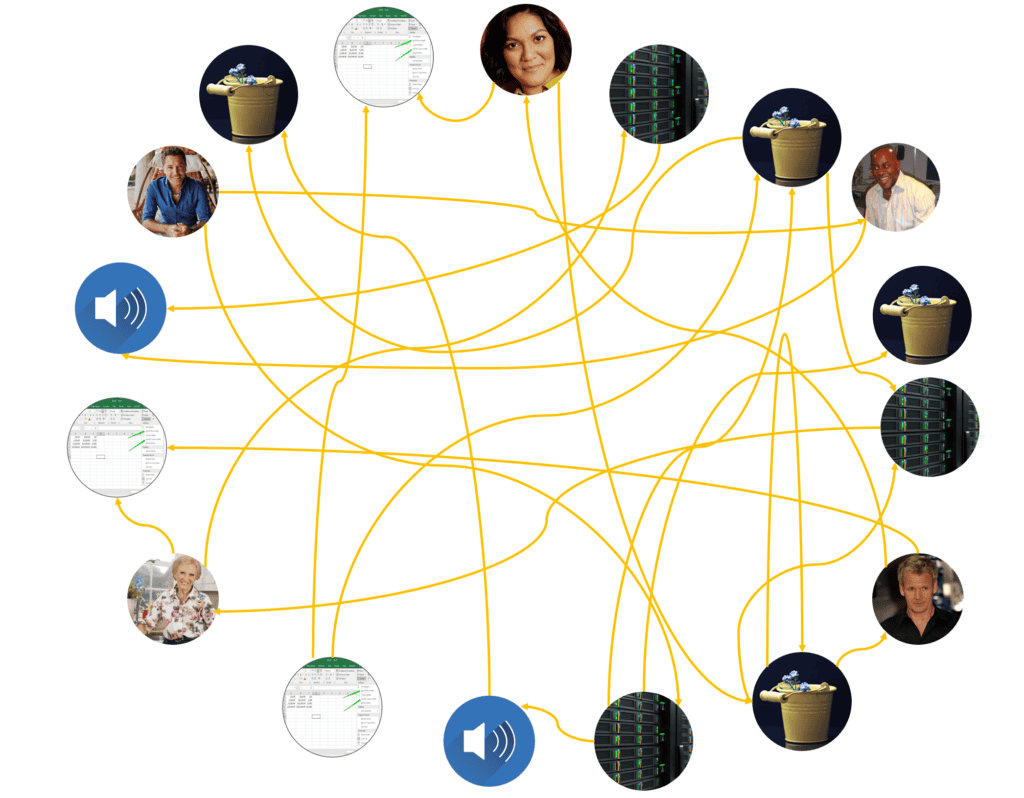

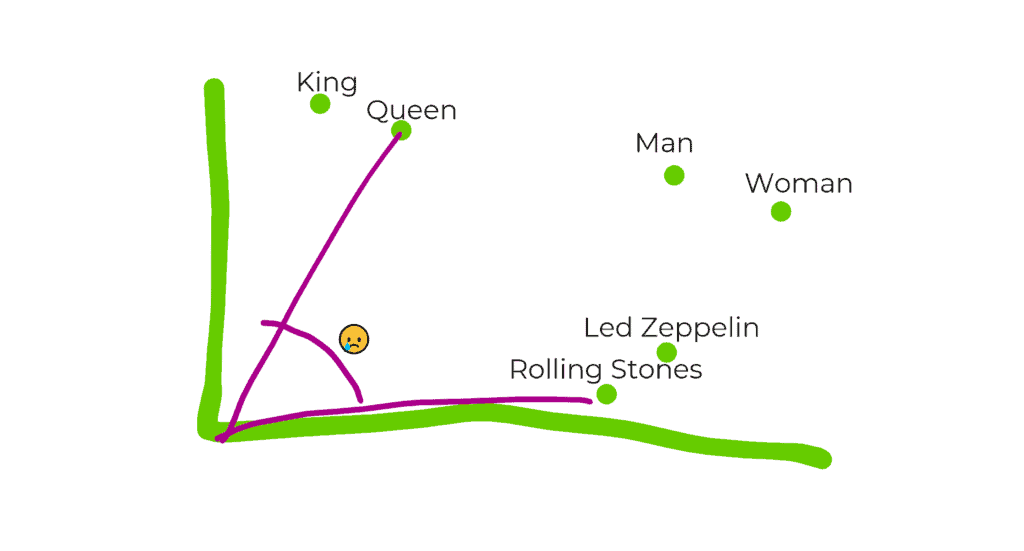

Finally, Anthony Pengelly’s pleasingly unhinged talk on vector databases started with a lay-person’s introduction to word embeddings and their use in semantic comparisons of words and phrases, before discussing how such embeddings are stored in vector databases (how many dimensions is too many? does using 3072 mean we’re now in hyperspace?), and finally the practical uses of these in improving and stabilising LLM outputs for GenAI applications. On the way, he explained how “king” minus “man” plus “woman” could equal “queen”, but not “Queen”, and lamented about how to get a Rolling Stone.
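For anyone new to the “king” minus “man” plus “woman” trick, here is a minimal sketch in Python. The vectors are hypothetical, hand-made three-dimensional toys rather than output from a real embedding model (which would use hundreds or thousands of learned dimensions), and the nearest neighbour is found by brute-force cosine similarity, which is the kind of lookup a vector database is built to do efficiently at scale.

```python
import math

# Hypothetical toy "embeddings", hand-made for illustration only.
# Assumed dimensions: [royalty, gender (+ male / - female), fruitiness]
EMBEDDINGS = {
    "king": [0.9, 0.8, 0.1],
    "queen": [0.9, -0.8, 0.1],
    "man": [0.1, 0.8, 0.1],
    "woman": [0.1, -0.8, 0.1],
    "apple": [0.0, 0.0, 0.9],
}


def cosine_similarity(a, b):
    """Cosine of the angle between vectors a and b (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    return dot / (norm_a * norm_b)


def analogy(a, b, c, embeddings=EMBEDDINGS):
    """Return the word closest to vec(a) - vec(b) + vec(c), excluding a, b and c."""
    target = [x - y + z for x, y, z in zip(embeddings[a], embeddings[b], embeddings[c])]
    candidates = (w for w in embeddings if w not in {a, b, c})
    return max(candidates, key=lambda w: cosine_similarity(target, embeddings[w]))


print(analogy("king", "man", "woman"))  # → queen
```

Excluding the three input words from the candidates is a common convention in analogy demos, since the result vector often lands closest to one of the inputs.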

Sound like your cup of tea? Then keep an eye on our LinkedIn page to make sure you’re the first to hear about our upcoming events!