During the summer of 2024, I led a team of brand-new developers on their first project. They all had experience writing code, but none had prior experience with professional software development.

We were embarking on a nine-week pro bono project to finish a web app for Norwood and Brixton Foodbank, a charity in South London.

While my main goals for the summer were to deliver the project and to train the developers, I had an extra task. I’d been asked to see what the team could achieve using AI assistance. If we used every tool and technique we could find, would we work faster and produce better software? If so, by how much?

A practical investigation was important to us because the providers’ websites bombard you with percentage after percentage telling you how great their products are. GitHub Copilot promises 55% faster coding, and so on.

We weren’t convinced that a real team would see those benefits. Those numbers are lazy marketing, intended to build the hype about AI. We needed to cut through those claims and establish the most useful way to use AI ourselves.

Takeaway 1: try out tools as teams, not individuals

Before this project, people at Softwire had already tried out numerous AI tools. I’ve also spoken to dozens of people across the industry about their experiences adopting AI, and it’s always the same story.

A few developers get very excited about how shiny and promising AI tools are. They try some out and share their experiences with each other, but the organisation as a whole still doesn’t buy into the tools.

I think there are two main problems with the ad hoc approach.

The first problem is that these tools yield greater results when everyone is using them. Some, such as code review tools, don’t even make sense outside of the context of a team, but even the tools that can be used by an individual take considerable skill to use well. When a team consistently uses the same tools, they’ll become experts in those tools much faster.

The second problem is that the people trying the tools and sharing opinions tend to be the more senior engineers. However, these engineers aren’t necessarily the ones who stand to gain the most from using these tools, so we need feedback from across the range of experience levels.

Hence our investigation: putting the experience of junior developers at the forefront and seeing what AI tools mean for a team.

Takeaway 2: categorise your options to narrow them down

At this point we just needed to pick the tools, but that presented a problem.

There are dozens and dozens of AI software development tools out there, many times more than we could hope to try during our nine weeks.

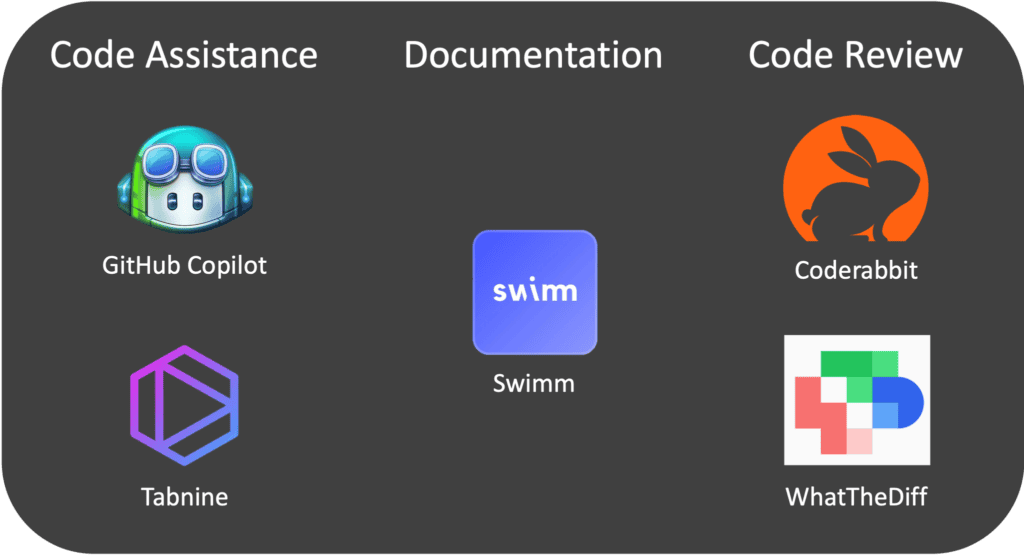

To create a manageable list of tools to try, we started by categorising them by use case. We then picked one or two tools per category based on our colleagues’ experiences, as well as non-functional requirements such as security.

The provider’s data security policy is one of the most helpful criteria for narrowing down your options. It used to be unclear how these companies used your data. Nowadays, whether you need SOC 2 compliance, the ability to self-host or even stricter controls, someone will provide that for you.

After we’d gone through this categorisation process, this was our list of tools:

Some were good, some were brilliant, and some didn’t help us as much as we’d hoped, but I’m not going to dwell on the particulars of the tools. Instead, here are a few key things we learned.

Takeaway 3: thinking small can be really useful

It’s often claimed that AI is going to change everything almost overnight. Personally, I don’t believe this. Industries adopt new technology incrementally; companies should do the same and treat adopting new tech as a part of daily business. The promise of AI tooling is no exception.

There’s an incredible amount to be gained from small tweaks to your workflow, not least because small alterations are easier for your teams to adopt.

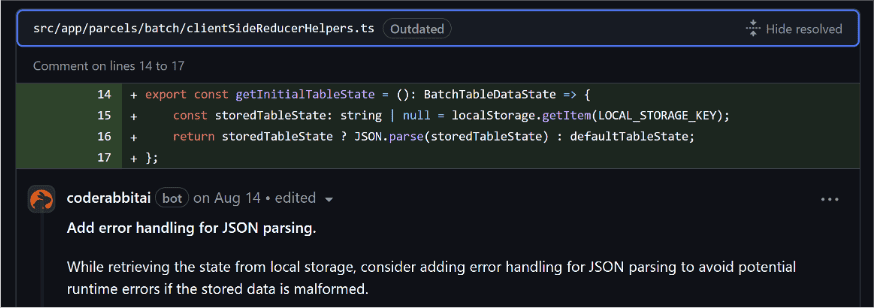

The best example of this from our project was CodeRabbit. CodeRabbit integrates with your Git provider and automatically reviews pull requests.

The first thing we loved about CodeRabbit was that it’s unobtrusive. It doesn’t demand that we do anything, which is incredibly refreshing compared with some of the pushier AI tools.

The most opinionated CodeRabbit gets is giving actionable comments like this:

I would have noticed this sort of thing when I reviewed the code, but that’s exactly why CodeRabbit is useful. It filters out small problems so that I can focus more on teaching higher-level code structure.

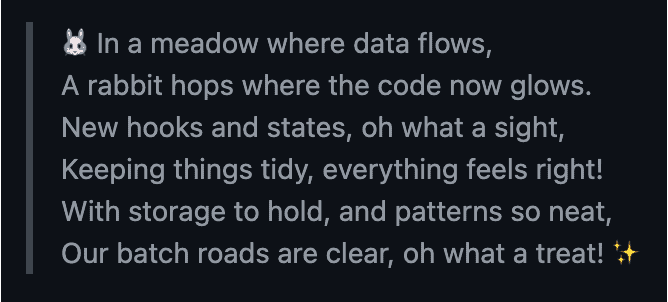

As a nice touch, CodeRabbit also writes a rabbit-themed poem based on each pull request. This little bit of silliness meant we looked forward to seeing what it would come up with, rather than resenting a tool for butting in on our work.

I sometimes needed that help with code reviews because, to let you in on a little secret…

Takeaway 4: AI tools make developers faster, not better

If you look more closely at the percentage claims from providers (“Amazon Q provides 25% faster initial development”), you’ll notice that all of them boast about increases in speed or productivity. None of them talk about writing better code.

We all know that being a good software developer is about more than just writing code that works. High-quality code comes from our experience and understanding of frameworks and best-practice principles.

AI couldn’t help my developers with that. They didn’t know what questions to ask it, and they wouldn’t have understood the answers it provided.

With AI assistance, these junior developers wrote code of the same quality that I’d usually expect (still high, because they were awesome developers), but their questions about patterns and ideas still came to me rather than to the AI.

I loved this. It meant that I could spend my time teaching the team about the interesting bits: about how to be developers, not just how to code, which was incredibly liberating.

Takeaway 5: focus hard on core quality

The two code assistants we tried in anger – GitHub Copilot and Tabnine – are very similar on the surface, which makes them interesting to compare.

GitHub Copilot has the largest userbase of any AI assistant and is in many ways the baseline to compare other tools against.

Tabnine is a bit of an outlier. It uses proprietary models, but its focus is on security. You can host it wherever you want, and you can even air-gap it to protect your data.

This caught our attention, since Softwire undertakes a fair amount of confidential private-sector and government work where security is key.

That said, when we compared our experience of GitHub Copilot and Tabnine, Copilot won, hands down.

This was purely because Copilot suggested better code. The core quality was higher, so my developers were more likely to press tab and accept Copilot’s code suggestions.

I’m not discounting Tabnine just yet, though, because if I do want an assistant with gold-standard data security, it’s still the clear winner.

Takeaway 6: don’t worry about the magic percentage

You probably want to know how much the AI tools actually helped us. What percentage improvement did we see?

We didn’t measure. And I don’t think you should either.

We were definitely faster. If I had to guess, I’d say 15–20%, but the exact number isn’t the point. Besides, the tools are evolving so quickly that, in the time it takes to measure their impact, that number will have changed.

If you do need to measure the impact of AI on your team’s productivity, don’t try to measure in the short term or in isolation. Think of the impact you’re looking for as being long-term, and qualitative as well as quantitative. In short, just start using AI tools, and the evidence that they’re working will come from your normal metrics for engineering team health. Above all, make sure you focus on your team’s experiences, because those hold much more information than a single number ever could.