LLMs (large language models) are one of the most exciting new areas of tech but, like me, you might have seen news articles warning of the planet-destroying impact of AI: headlines like ‘Google emissions jump nearly 50% over five years as AI use surges’ in the Financial Times, or The Guardian’s ‘The ugly truth behind ChatGPT: AI is guzzling resources at planet-eating rates’.

I’ve spent the best part of the last year working on generative AI projects at Softwire, so I wanted to know: what are the actual numbers involved here? Is it really a lot in the grand scheme of things? And what can we do better as engineers?

How does AI emit carbon?

The first thing I wanted to do was to find some concrete numbers. Frustratingly, there’s very little transparency here from the big LLM providers – most of the numbers floating around the internet have been calculated by third parties employing quite a bit of guesswork.

The most (and perhaps only) comprehensive source available so far is a paper on the carbon footprint of an LLM called BLOOM [1]. Although it’s not on the scale of the larger GPT models, it’s a useful starting point for understanding the emissions involved in an LLM’s lifecycle. I’m going to dive into some of the numbers here and break them down into a more digestible format.

Before we start, let me introduce you to the unit of measurement for carbon emissions: the carbon dioxide equivalent (CO2e). This expresses the impact of different greenhouse gas emissions on the climate in terms of the amount of carbon dioxide that would produce an equivalent effect. We’ll be using metric units of CO2e throughout.

1. Running costs

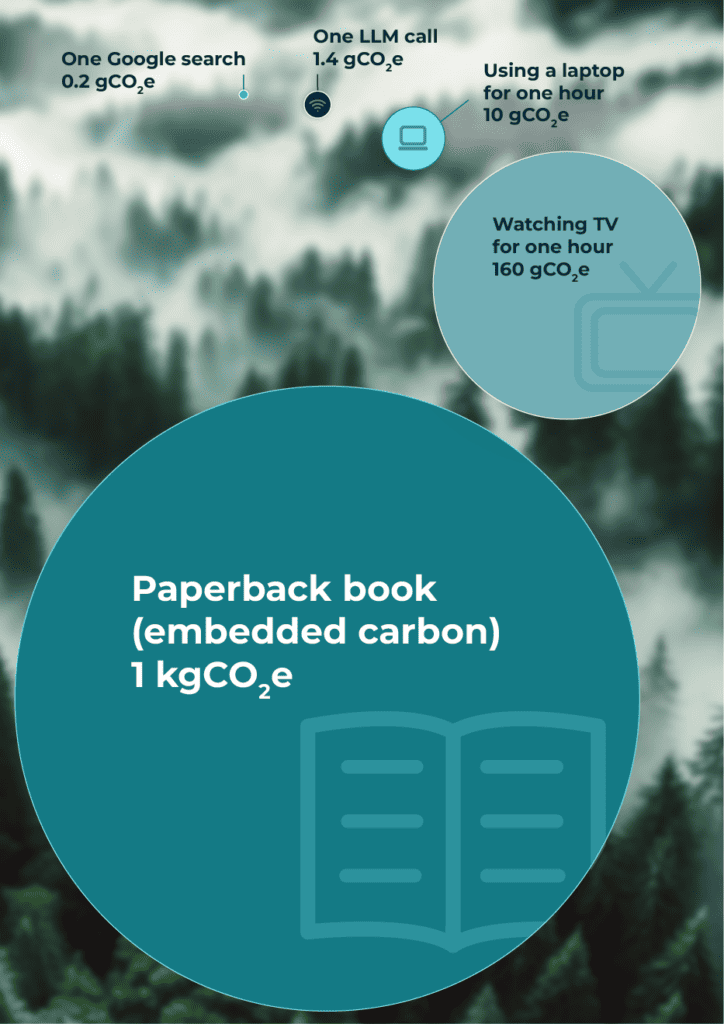

At first, you might think the only cost of an LLM is the electricity used to run the model – every time we send a request, some power is used to process it and send a response. But each individual call is tiny: the study showed BLOOM emitted an average of 1.41g of CO2e per call. Running at an average of 558 requests per hour, this totalled around 19kg of CO2e a day, the same as driving around 50 miles in a car. If the model had been deployed on a grid with a higher proportion of low-carbon energy, this could have been even lower [2].
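If you want to check that arithmetic yourself, here is a minimal Python sketch that rebuilds the daily figure from the per-call and request-rate numbers quoted above. It is a back-of-the-envelope check rather than the paper's methodology, and the function and constant names are my own.

```python
# Back-of-the-envelope check of BLOOM's daily inference emissions.
# Inputs are the figures quoted above; the names are illustrative.

GRAMS_PER_CALL = 1.41      # average gCO2e per request
REQUESTS_PER_HOUR = 558    # average request rate during the study


def daily_emissions_kg(grams_per_call: float, requests_per_hour: float) -> float:
    """Return the estimated emissions for one day of serving, in kg of CO2e."""
    calls_per_day = requests_per_hour * 24
    return grams_per_call * calls_per_day / 1000  # grams -> kilograms


if __name__ == "__main__":
    daily = daily_emissions_kg(GRAMS_PER_CALL, REQUESTS_PER_HOUR)
    print(f"{daily:.1f} kg CO2e per day")  # prints "18.9 kg CO2e per day"
```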

2. Training the model

Much more significant is the second source of LLM carbon emissions – training costs. This is a one-off cost when the model is created, and often takes months of training on hundreds of GPUs. BLOOM’s estimate here is made up of two parts.

Firstly, the carbon cost of the energy consumed by those GPUs is estimated at 25 tonnes of CO2e – you’d have to run BLOOM at normal operating capacity for three and a half years to reach the same emissions. To make matters worse, these figures can balloon for larger models – GPT-3 generated an estimated 550 tonnes of CO2e [3] during training.

On top of this, there’s the electricity required to run the broader infrastructure that supports these AI processes – network, storage, cooling, and idle compute nodes. This accounts for another 14 tonnes of emissions associated with model training.

Again, these emissions come from electricity usage and heavily depend on how that electricity was generated. BLOOM was trained on a supercomputer in France, which has one of the lowest-carbon grids in the world – its emissions could have been over four times higher if it had been trained in the UK.

3. Embedded emissions

The most frequently overlooked source of LLM emissions is embedded carbon. This refers to the cost of manufacturing the computing hardware on which LLMs are trained and hosted – primarily GPUs and servers. The BLOOM paper estimates this at around 11 tonnes of CO2e.

This means the up-front cost of creating BLOOM totals at least 50 tonnes of CO2e – 25 tonnes from running GPUs, 14 tonnes from running supporting infrastructure, and 11 tonnes from manufacturing hardware.
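To see how the three up-front sources stack up against day-to-day running, here is a rough Python sketch using only the figures quoted in this article. The dictionary keys and function name are my own labels, and the 19kg daily figure is the rounded value from the running costs section, so treat the outputs as approximate.

```python
# Sum BLOOM's up-front emissions and compare them with day-to-day serving.
# All figures are the ones quoted in this article; names are illustrative.

UPFRONT_TONNES = {
    "training_gpu_energy": 25,
    "supporting_infrastructure": 14,
    "hardware_manufacturing": 11,
}
DAILY_SERVING_KG = 19  # approximate daily inference emissions (rounded)


def days_of_serving_to_match(tonnes: float, daily_kg: float) -> float:
    """Days of inference needed to emit as much as `tonnes` of CO2e."""
    return tonnes * 1000 / daily_kg


if __name__ == "__main__":
    total = sum(UPFRONT_TONNES.values())
    print(f"Up-front total: {total} tonnes CO2e")
    for source, tonnes in UPFRONT_TONNES.items():
        years = days_of_serving_to_match(tonnes, DAILY_SERVING_KG) / 365
        share = tonnes / total
        print(f"{source}: {tonnes} t ({share:.0%} of total, ~{years:.1f} years of serving)")
```

Running it shows that GPU energy alone is half of the up-front total, and that it takes roughly three and a half years of serving to match it.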

It’s not as bad as you think!

Before I panicked too much about these figures, I wanted to put them into perspective.

In my day-to-day, I use LLMs as a starting point for particularly thorny problems which I’d struggle to solve in a couple of Google searches. Figures from Google put one search at 0.2g of CO2e – equivalent to around one-seventh of a call to BLOOM. This suggests that replacing several Google searches with one LLM call doesn’t make a major difference to your digital footprint. On the other hand, it’s a waste of resources to ask an LLM when a simple search will suffice!
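As a quick sanity check on that comparison, the sketch below works out how many searches one LLM call is "worth". It assumes the two per-request figures above are directly comparable, which is a simplification since they come from different sources with different methodologies; the function names are hypothetical.

```python
# How many Google searches emit as much as one call to BLOOM?
# Both figures are the ones quoted above; they come from different sources,
# so this is only a like-for-like comparison under that assumption.

GRAMS_PER_SEARCH = 0.2     # gCO2e per Google search
GRAMS_PER_LLM_CALL = 1.41  # gCO2e per BLOOM request


def break_even_searches() -> float:
    """Number of searches with the same emissions as one LLM call."""
    return GRAMS_PER_LLM_CALL / GRAMS_PER_SEARCH


def llm_is_lower_carbon(searches_replaced: int) -> bool:
    """True if one LLM call emits less than the searches it replaces."""
    return searches_replaced * GRAMS_PER_SEARCH > GRAMS_PER_LLM_CALL


if __name__ == "__main__":
    print(f"One LLM call is roughly {break_even_searches():.0f} searches")
    for n in (1, 3, 8):
        print(n, "searches replaced -> LLM lower carbon?", llm_is_lower_carbon(n))
```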

More complex tasks like image or text generation can be done far quicker with AI assistance, which can save energy overall. So it isn’t enough to look at AI’s carbon emissions in isolation – it also depends on what the AI is being used to achieve.

A single LLM call emits 10 times less carbon than simply running your laptop for an hour, and 1,000 times less carbon than producing an average book!

On a larger scale, here are a few things whose emissions are roughly equivalent to the entire training cost of GPT-3 [4]:

- 115 average UK households over the course of a year [5]

- 200 average pet cats’ lifetimes [6]

- Manufacturing 30 medium-sized cars [7]

- 1 return flight from London to New York (total for all passengers) [8]

Put in this context, LLMs seem far from the horrific resource-munching villains that the headlines make out.
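For anyone who wants to reproduce one of these comparisons, here is a small Python sketch of the household figure. It uses the ONS totals given in the footnotes and the 550-tonne GPT-3 estimate; the names are my own, and the result is only as good as those rounded inputs.

```python
# Reproduce the "UK households" comparison for GPT-3's training emissions.
# Inputs are the figures quoted in this article and its footnotes.

GPT3_TRAINING_TONNES = 550                   # estimated tCO2e for training GPT-3
UK_HOUSEHOLD_EMISSIONS_TONNES = 133_000_000  # total UK household tCO2e, 2020
UK_HOUSEHOLDS = 27_800_000                   # estimated UK households, 2020


def household_years(total_tonnes: float) -> float:
    """Number of average UK households whose annual emissions equal `total_tonnes`."""
    tonnes_per_household = UK_HOUSEHOLD_EMISSIONS_TONNES / UK_HOUSEHOLDS
    return total_tonnes / tonnes_per_household


if __name__ == "__main__":
    print(f"About {household_years(GPT3_TRAINING_TONNES):.0f} household-years")
```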

Still, what can we do about it?

Here are some ways to improve the efficiency of your LLM use as an engineer on large-scale projects.

1. Use small, specialised models

Larger LLMs are more expensive to call and run, but aren’t necessary for every task. Think about it this way – if you wanted to hammer a tiny nail into a plank of wood, you wouldn’t go out and buy a hydraulic press. In a similar way, we don’t have to rely on ChatGPT for all tasks. Instead, consider using SLMs (small language models), which can be a good fit for many use cases:

- Accessibility tools: SLMs have been used to improve speech-to-text tools.

- Sentiment analysis: SLMs can analyse text to determine whether a sentence is positive, negative or neutral.

- Real-time language translation: Apps such as travel aids can use SLMs to translate text based on context.

Platforms like Hugging Face provide enormous banks of SLMs trained for these specialised tasks. You can deploy these models or even self-host them if the model is small enough. Many of them are open source, so they’re typically far cheaper than buying licences for ChatGPT.

2. Choose transparent suppliers

Choose suppliers who are credible and transparent, and who have ambitious net zero policies verified by a trusted third party like the Science Based Targets initiative.

For example, Softwire removes all the carbon we emit each year using durable carbon removals – meaning our activities contribute no net carbon emissions to our clients’ footprints.

Cloud suppliers are clearly a key factor in GenAI projects. Of the major providers, Microsoft aims to go carbon negative by 2030 and remove all carbon they’ve emitted since they were founded by 2050; Google are aiming for net zero by 2030. They both offer tools to measure the carbon footprint of your cloud usage.

3. Host your project in green data centres

If you have the freedom to choose where to host your project, pick a region which uses clean energy. An article from Climatiq suggests that data centres in Sweden are over 30 times more carbon efficient than those in London, and hundreds of times more than ones in Australia.
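The underlying sum is simple: emissions are the energy you use multiplied by the carbon intensity of the grid supplying it. Here is a minimal Python sketch of that comparison. The two intensities are the ones quoted in footnote 2 of this article; the 1,000 kWh workload and the function names are hypothetical, and a real project would look up figures for each candidate region from its cloud provider.

```python
# Estimate emissions for the same workload on grids of different carbon intensity.
# Intensities (gCO2e/kWh) are the two figures quoted in footnote 2; the
# workload size below is a made-up example, not a measured value.

GRID_INTENSITY = {
    "us-central1": 394,
    "uk-average-2022": 182,
}


def emissions_kg(energy_kwh: float, intensity_g_per_kwh: float) -> float:
    """Emissions in kg of CO2e for `energy_kwh` drawn from a given grid."""
    return energy_kwh * intensity_g_per_kwh / 1000  # grams -> kilograms


def greenest_region(intensities: dict[str, float]) -> str:
    """Return the region name with the lowest grid carbon intensity."""
    return min(intensities, key=intensities.get)


if __name__ == "__main__":
    workload_kwh = 1000  # hypothetical workload
    for region, intensity in GRID_INTENSITY.items():
        print(f"{region}: {emissions_kg(workload_kwh, intensity):.0f} kg CO2e")
    print("Lowest-carbon option:", greenest_region(GRID_INTENSITY))
```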

Face fears and make deliberate choices

Ultimately, although the carbon costs of LLMs are high, research shows they aren’t as scary as people often make out. Crunching the numbers really helped put my mind at ease – comparing the costs to other sources of emissions was a change of perspective, and a reminder not to overlook these everyday emissions. As well as this, the fact that we can pick more energy-efficient models and use greener suppliers left me optimistic about the future of AI and its climate cost in tech. However, as with most climate-based problems, it’s the big companies that are at the heart of the emissions and can have the biggest impact.

1. Luccioni A., Viguier S., Ligozat A., Estimating the carbon footprint of BLOOM, a 176B parameter language model, JMLR Volume 24 (2023).

2. BLOOM was running in the us-central1 region during this period, with a carbon intensity of 394 gCO2e/kWh. The UK’s average carbon intensity was about half that in 2022: 182 gCO2e/kWh.

3. Patterson, et al., Carbon Emissions and Large Neural Network Training, arXiv:2104.10350 (2021).

4. Ibid.

5. Total household emissions of 133 million tCO2e in 2020 (source: ONS), with an estimated 27.8 million UK households that year (source: ONS).

6. Martens, et al., The Ecological Paw Print of Companion Dogs and Cats, BioScience Volume 69 Issue 6 (2019).

7. Mike Berners-Lee and Duncan Clark, What’s the Carbon Footprint of… A New Car?, The Guardian (2010).

8. Rough figures from Carbon Independent show that a passenger airliner travelling an equivalent distance burns 59.6 tonnes of fuel, emitting 3.15 g of CO2e per g of fuel. Doubling for radiative forcing, and doubling again for a return flight, gives around 751 tCO2e, well over the estimated training cost of GPT-3.