Sean Williams, Founder and CEO at AutogenAI, recently gave a keynote speech at the Softwire Manchester office: ‘How large language models are going to change the world’. In it, Sean explained what large language models are and what they are used for, and gave a live demonstration of a model responding to questions.

Here are the highlights and takeaways from his talk:

What is a large language model?

A large language model is a type of neural network designed specifically for producing and analysing language. We are very used to computers doing complicated sums, but most people are not yet used to computers producing text and ideas. Large language models work by learning statistical associations between billions of words and phrases. They are not just searching the internet: the AI formulates new sentences and ideas based on those associations between words.
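To make ‘statistical associations between words’ a little more concrete, here is a deliberately tiny sketch: a bigram model that counts which word follows which in a toy corpus, then samples new text in proportion to those counts. Real large language models are transformer networks that learn far richer associations than word pairs; the corpus, function names and seed below are illustrative assumptions, not anything from AutogenAI.

```python
import random
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count how often each word is followed by each other word."""
    words = text.lower().split()
    counts: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        counts[current][nxt] += 1
    return counts


def generate(counts: dict[str, Counter], start: str, length: int, seed: int = 0) -> list[str]:
    """Sample a word sequence, picking each next word in proportion to its count."""
    rng = random.Random(seed)
    output = [start]
    for _ in range(length - 1):
        followers = counts.get(output[-1])
        if not followers:  # dead end: this word was never followed by anything
            break
        words, weights = zip(*followers.items())
        output.append(rng.choices(words, weights=weights, k=1)[0])
    return output


corpus = "the model reads text . the model learns patterns . the writer edits text ."
bigrams = train_bigrams(corpus)
print(bigrams["the"])  # Counter({'model': 2, 'writer': 1})
print(" ".join(generate(bigrams, "the", 8)))
```

The generated sentence can be one that never appears in the corpus, which is the small-scale version of the point above: the output is assembled from learned associations rather than retrieved.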

They’re charting new linguistic territory. If you run their output through a plagiarism checker, you won’t find a match anywhere, because the model isn’t copying text from a source; it is creating new ideas and new content.
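One simple technique behind plagiarism detection is to look for runs of consecutive words (n-grams) shared with known sources. The hypothetical `overlap_score` helper below is a minimal sketch of that idea, assuming whitespace tokenisation and 5-word windows; commercial checkers are considerably more sophisticated, and a low score shows novel wording rather than proving original ideas.

```python
def ngrams(text: str, n: int) -> set[tuple[str, ...]]:
    """Return the set of n-word sequences in a text (lower-cased, whitespace-split)."""
    words = text.lower().split()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def overlap_score(candidate: str, source: str, n: int = 5) -> float:
    """Fraction of the candidate's n-grams that also appear in the source."""
    cand = ngrams(candidate, n)
    if not cand:  # candidate shorter than n words: nothing to compare
        return 0.0
    return len(cand & ngrams(source, n)) / len(cand)


source = "large language models learn statistical associations between billions of words"
copied = "large language models learn statistical associations between words"
fresh = "these systems predict plausible next words from patterns in their training data"
print(overlap_score(copied, source))  # 0.75
print(overlap_score(fresh, source))   # 0.0
```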

How do large language models work?

The system is pre-trained on nearly the entire corpus of digitised human knowledge. This starts with the Common Crawl, a vast public archive of web pages, filtered down to the text you’d want your large language model trained on. For example, AutogenAI’s language model is trained on 580 billion words from the internet. It has also read all of the digitised books in the world (26 billion words), all of the information on Wikipedia (94 billion words) and other web text (another 26 billion words). That’s over 700 billion words in total.
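A quick tally of the figures quoted above confirms the ‘over 700 billion words’ total and shows how heavily the mix is weighted towards web text. The numbers are simply those given in the talk; this is a back-of-the-envelope check, not a description of the actual training pipeline.

```python
# Figures quoted in the talk, in billions of words.
corpus_billion_words = {
    "Web (Common Crawl)": 580,
    "Digitised books": 26,
    "Wikipedia": 94,
    "Other web text": 26,
}

total = sum(corpus_billion_words.values())
print(f"Total: {total} billion words")  # Total: 726 billion words

# Share of the total contributed by each source, rounded to whole percentages.
for source, size in corpus_billion_words.items():
    print(f"{source:<20}{size:>4}bn  {size / total:.0%}")
```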

What are the costs and time requirements for a large language model?

It currently costs about $10 million and takes about a month to train a large language model, so we’re not talking years or decades. The research and development costs for the most sophisticated models are unknown, but are likely, of course, to be much higher.

As this is a hot area, there’s a lot of interesting work happening in the open-source space, and a lot of investment is going into it. For example, Microsoft invested $1 billion in OpenAI in 2019.

What models are available now?

Google has LaMDA. Microsoft, through its investment in OpenAI, has GPT-3.

What are large language models currently being used for?

Translating text from one language to another

Computers can now do this almost as well as humans, and orders of magnitude more efficiently. Google Translate is already being surpassed.

Writing compelling, persuasive business prose quickly and cheaply

Businesses that write blogs, tenders, proposals or marketing copy can benefit from using modern natural language processing (NLP) capabilities to produce high-quality content more quickly and efficiently. No one would create a financial model without using Excel; in the very near future, no one will write professional prose without AI writing support.

Transcribing from human speech more quickly and more accurately than most human transcribers

Automating speech-to-text not only lets businesses save money on expensive transcription services but also makes it possible to transcribe far more spoken content. For example, it is now possible and economical to capture and efficiently share everything that is said at all company meetings across the globe.

Understanding and categorising human language

This has applications across most businesses. For example, tech can now be used to understand customer reviews and social media posts. This can be used to identify trends in customer sentiment, spot opportunities for improvement, or simply to better understand how customers feel about a product or service.
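Modern systems use trained language models for this, but the basic shape of the task (score each piece of feedback, then aggregate the scores to spot a trend) can be sketched with a tiny hand-written word list. The lexicon, reviews and month labels below are made-up assumptions for illustration; a word-counting approach like this misses sarcasm, negation and context that a language-model-based classifier is designed to handle.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical sentiment lexicon: +1 for positive words, -1 for negative ones.
LEXICON = {"great": 1, "love": 1, "fast": 1, "helpful": 1,
           "slow": -1, "broken": -1, "refund": -1, "rude": -1}


def score(review: str) -> int:
    """Sum the lexicon scores of the words in a review (unknown words score 0)."""
    return sum(LEXICON.get(word.strip(".,!?").lower(), 0) for word in review.split())


# Made-up (month, review) pairs standing in for real customer feedback.
reviews = [
    ("2022-08", "Great product, love it!"),
    ("2022-08", "Fast delivery and helpful staff."),
    ("2022-09", "App is slow and the login is broken."),
    ("2022-09", "Rude support, I want a refund."),
]

# Group scores by month so a change in average sentiment shows up as a trend.
by_month: dict[str, list[int]] = defaultdict(list)
for month, text in reviews:
    by_month[month].append(score(text))

for month in sorted(by_month):
    print(month, mean(by_month[month]))
```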

Summarising long pieces of text

This was long considered a very difficult problem in NLP, but recent advances have catapulted computers forward. It is now possible for a computer to read a long document and produce a shorter summary that captures the main points.
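Large language models generally write abstractive summaries in their own words. As a much simpler point of comparison, the sketch below uses a classic extractive approach instead: score each sentence by how frequent its words are across the whole document and keep the top few in their original order. The stop-word list and the naive sentence splitting are simplifying assumptions.

```python
import re
from collections import Counter

STOP_WORDS = {"the", "a", "an", "and", "of", "to", "is", "in", "it", "on", "for", "that"}


def summarise(text: str, max_sentences: int = 2) -> str:
    """Return the highest-scoring sentences, kept in their original order."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    words = [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOP_WORDS]
    freq = Counter(words)

    def sentence_score(sentence: str) -> float:
        tokens = [w for w in re.findall(r"[a-z']+", sentence.lower()) if w not in STOP_WORDS]
        # Average rather than total, so long sentences are not automatically favoured.
        return sum(freq[w] for w in tokens) / len(tokens) if tokens else 0.0

    ranked = sorted(range(len(sentences)), key=lambda i: sentence_score(sentences[i]), reverse=True)
    keep = sorted(ranked[:max_sentences])  # restore document order
    return " ".join(sentences[i] for i in keep)


doc = ("Language models summarise documents. Language models also translate text. "
       "The weather was pleasant. Summaries of documents help busy readers.")
print(summarise(doc))  # Language models summarise documents. Language models also translate text.
```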

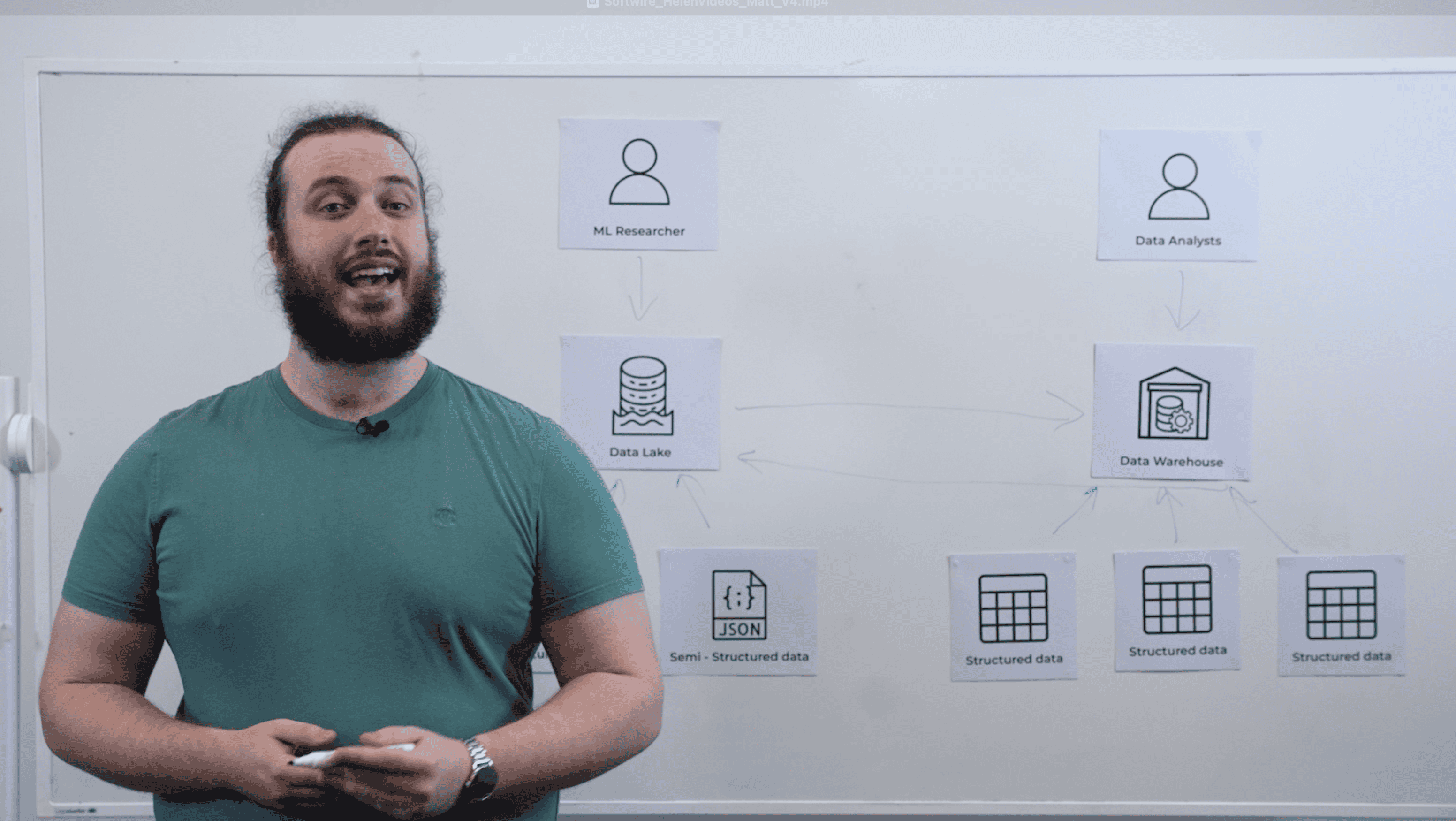

How is AutogenAI using this technology?

AutogenAI are using cutting-edge natural language processing solutions to help their customers to write bids, tenders and proposals.

AutogenAI’s business is about the commercial applications of this interesting technology. This includes writing compelling, persuasive business prose quickly and cheaply. It involves building user interfaces and user experiences, and doing prompt engineering, on top of large language models.

This approach leaves room for substantial human editing afterwards. It doesn’t replace human writers; what it does do is help them get past the ‘blank piece of paper’ stage.
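AutogenAI’s own prompts are not public, so the following is only a hypothetical sketch of what ‘prompt engineering on top of large language models’ can look like: a template that wraps a bid writer’s request with instructions and context before it would be sent to a model. The `BidRequest` fields, the template wording and the choice to ask for several drafts are all illustrative assumptions, and no model API is called here.

```python
from dataclasses import dataclass, field


@dataclass
class BidRequest:
    """Hypothetical inputs a bid writer might supply."""
    question: str                      # the tender question being answered
    company: str
    key_points: list[str] = field(default_factory=list)
    word_limit: int = 250
    drafts: int = 3                    # several options for a human editor to choose from


PROMPT_TEMPLATE = """You are an experienced bid writer for {company}.
Write {drafts} alternative draft answers to the tender question below.
Each draft must be persuasive, factual and no longer than {word_limit} words.
Make sure every draft covers these points:
{points}

Tender question: {question}
"""


def build_prompt(req: BidRequest) -> str:
    """Fill the template so the model receives instructions, context and the task together."""
    points = "\n".join(f"- {p}" for p in req.key_points) or "- (none supplied)"
    return PROMPT_TEMPLATE.format(
        company=req.company,
        drafts=req.drafts,
        word_limit=req.word_limit,
        points=points,
        question=req.question,
    )


print(build_prompt(BidRequest(
    question="How will you ensure continuity of service during the transition?",
    company="Example Ltd",
    key_points=["dedicated transition manager", "weekly progress reports"],
)))
```

Asking for several drafts rather than one fits the workflow described above, where the output is a starting point that a human then edits.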

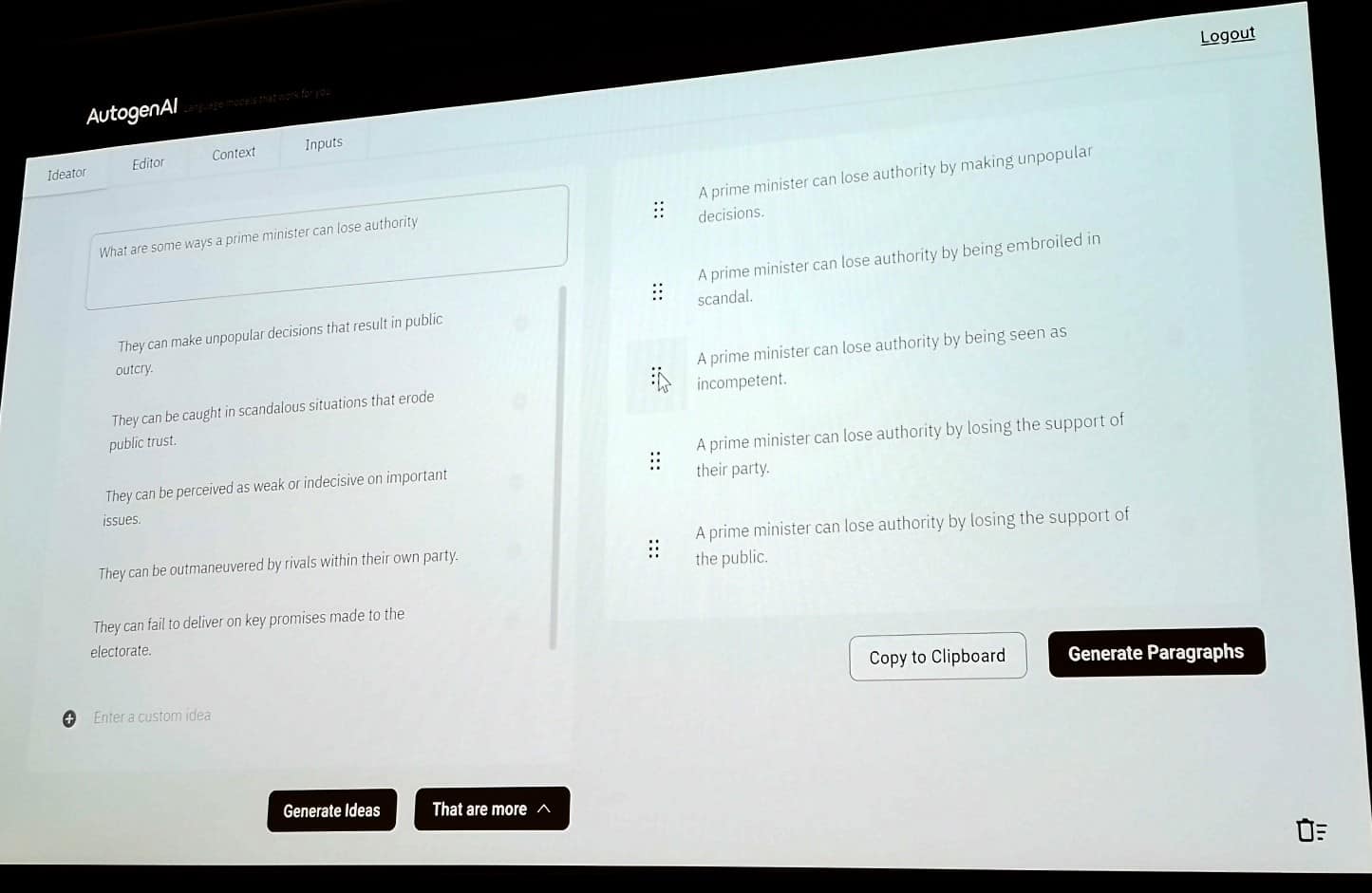

Demonstrating a live language model in action

Sean demonstrated just how good these models are at generating language that you could well believe a human had written. When asked “What are some ways a prime minister can lose authority?”, it answered with the following:

Some audience members asked whether this version of artificial intelligence is ‘intelligent’. In Sean’s opinion, humans are remarkably intelligent creatures, and computers aren’t there yet. Within AutogenAI’s editor, the model comes up with lots of ideas. Some of them are great and some may not be, and it’s up to the human editor to choose which to go with.
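One common reason a model ‘comes up with lots of ideas’ is that text is generated by sampling, and a temperature setting controls how adventurous that sampling is. The sketch below applies a softmax with temperature to a made-up set of next-word scores and then draws several samples; the candidate words, scores and seed are invented for illustration and are not taken from any real model.

```python
import math
import random


def softmax(scores: dict[str, float], temperature: float) -> dict[str, float]:
    """Convert raw scores to probabilities; lower temperature sharpens, higher flattens."""
    scaled = {w: s / temperature for w, s in scores.items()}
    top = max(scaled.values())  # subtract the max for numerical stability
    exps = {w: math.exp(s - top) for w, s in scaled.items()}
    total = sum(exps.values())
    return {w: e / total for w, e in exps.items()}


# Invented next-word scores for a prompt such as "Our approach is ..."
scores = {"proven": 2.0, "innovative": 1.5, "flexible": 1.0, "radical": 0.2}

for t in (0.2, 1.0, 2.0):
    probs = softmax(scores, t)
    print(t, {w: round(p, 2) for w, p in probs.items()})

# Drawing several samples gives a human editor different options to choose between.
rng = random.Random(42)
probs = softmax(scores, 1.0)
ideas = rng.choices(list(probs), weights=list(probs.values()), k=5)
print(ideas)
```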

When an audience member asked the model, ‘Are you sentient?’, it answered in the affirmative. Sean noted that the software is clearly not self-aware; as a tool, it simply generates ideas about how it might be considered self-aware: I think, I can feel pain or pleasure, I can remember things, I can learn new things. But printing ‘I am sentient’ does not make a computer sentient.

Another limitation is the scope of the data a model has been trained on. For example, if a model has only been trained on the last two years’ worth of information, it will not know about anything outside that period.

Also, removing extreme content from the Common Crawl (such as swear words or unpopular opinions) involves a trade-off: answers may become less ‘truthful’ to niche audiences and voices.
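Filtering of this kind can be done, at least in part, with simple rules. The sketch below drops any document containing a word from a blocklist; the blocklist and documents are hypothetical placeholders, and real data pipelines use much larger lists and other signals. It also illustrates the trade-off described above: a crude rule can remove legitimate text along with the content it was aimed at.

```python
import re

# Hypothetical blocklist; real pipelines use far larger lists and other signals too.
BLOCKLIST = {"kill", "idiot"}


def is_clean(document: str) -> bool:
    """Keep a document only if none of its words appear on the blocklist."""
    words = set(re.findall(r"[a-z]+", document.lower()))
    return words.isdisjoint(BLOCKLIST)


documents = [
    "You are an idiot and everyone agrees.",   # the kind of text the filter targets
    "How to kill a stuck process on Linux.",    # legitimate text caught by the same rule
    "A beginner's guide to sourdough baking.",
]

kept = [d for d in documents if is_clean(d)]
print(kept)  # ["A beginner's guide to sourdough baking."]
```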

Can large language models continue learning?

One of the things we can do with large language models is ‘fine-tune’ them. You take the model, which has already read everything in the corpus and the books, and train it further on a new corpus of text, which enables it to come up with novel ideas. As Sean says, there are developments in AI every week, so there is much more to come in the coming months.
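The core idea of fine-tuning, starting from already-trained weights and continuing training on new text, can be shown at toy scale. The sketch below trains a tiny next-word model (a table of logits updated by gradient descent on cross-entropy loss) on ‘general’ text, then continues training the same weights on a small domain corpus. The corpora, learning rate, epoch counts and helper names are hypothetical; a real large language model has billions of parameters, but the principle of continuing training from pre-trained weights is broadly the same.

```python
import math
from collections import defaultdict


def word_pairs(text: str) -> list[tuple[str, str]]:
    """(previous word, next word) training examples from a text."""
    words = text.lower().split()
    return list(zip(words, words[1:]))


def train(logits, examples, vocab, epochs: int, lr: float = 0.5):
    """Gradient descent on cross-entropy loss for a next-word logit table (updated in place)."""
    for _ in range(epochs):
        for prev, nxt in examples:
            row = logits[prev]
            top = max(row[w] for w in vocab)
            exps = {w: math.exp(row[w] - top) for w in vocab}
            z = sum(exps.values())
            for w in vocab:
                # Gradient w.r.t. logit w is p(w) - 1 for the true next word, p(w) otherwise.
                target = 1.0 if w == nxt else 0.0
                row[w] -= lr * (exps[w] / z - target)
    return logits


def predict(logits, prev: str, vocab: list[str]) -> str:
    """Most likely next word after `prev`."""
    return max(vocab, key=lambda w: logits[prev][w])


general = "our team is great . our team is friendly"
domain = "our bid is compliant . our bid is competitive"
vocab = sorted(set(general.split()) | set(domain.split()))

logits = defaultdict(lambda: defaultdict(float))  # every logit starts at 0.0
train(logits, word_pairs(general), vocab, epochs=20)  # "pre-training"
before = predict(logits, "our", vocab)

train(logits, word_pairs(domain), vocab, epochs=20)   # "fine-tuning" the same weights
after = predict(logits, "our", vocab)
print(before, "->", after)  # team -> bid
```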

This AI talk event took place on 20th October 2022, hosted by Softwire Manchester. For more information about Softwire Manchester, please contact Farooq Ansari, Business Development Manager at Softwire, on 0161 537 1700.