Modelling and forecasting forms an important part of numerous industries, from financial services to urban planning, and from flood prevention to climate change forecasting.

What will happen to a financial asset in the event of world temperatures rising by 1.5°C? What will be the result of a 5cm increase in sea levels on a property portfolio?

Modelling enables analysts to answer questions such as these, and ultimately help people make more-informed decisions. The models used are typically sophisticated, and created by individuals or teams with deep knowledge of their domain, but limited technical experience. As such, they’ll typically build their models using spreadsheets.

The age-old spreadsheet challenge

While spreadsheets enable analysts to create relatively comprehensive models, building them in this way gives rise to challenges that will eventually require attention.

We’ve recently been involved in some projects where the customer has asked us to address some of these challenges. Given the growing desire for this type of modelling in the market, we thought it would be valuable to share some of the issues we’re seeing, and outline ways to help address them.

Challenges with sophisticated spreadsheet-based models

Spreadsheets used for modelling are invariably complex. In many cases, they’re only usable by the person that created them or, at best, other modelling specialists. This constrained user base limits the wider usefulness of the models. Wouldn’t it be better, for example, if decision-makers themselves could access curated outputs from the models in a controlled way, rather than needing specialist input every time?

Spreadsheets can also be fragile. An inadvertent change to the formula in a cell on one tab can have significant impacts elsewhere – sometimes without anyone noticing. Add to this the fact that spreadsheets can be copied and changed with relative ease, and you end up with situations where different people are all using slightly different versions of what they believe is the same model, with all the risks that entails. And that’s before you add in the complexity of version-controlling the source data being used in each of these spreadsheets.

Moreover, spreadsheets can struggle when dealing with very large data volumes, and applying the levels of security required when working with any kind of sensitive data is at best difficult and at worst impossible.

How to productionise your spreadsheet-based models

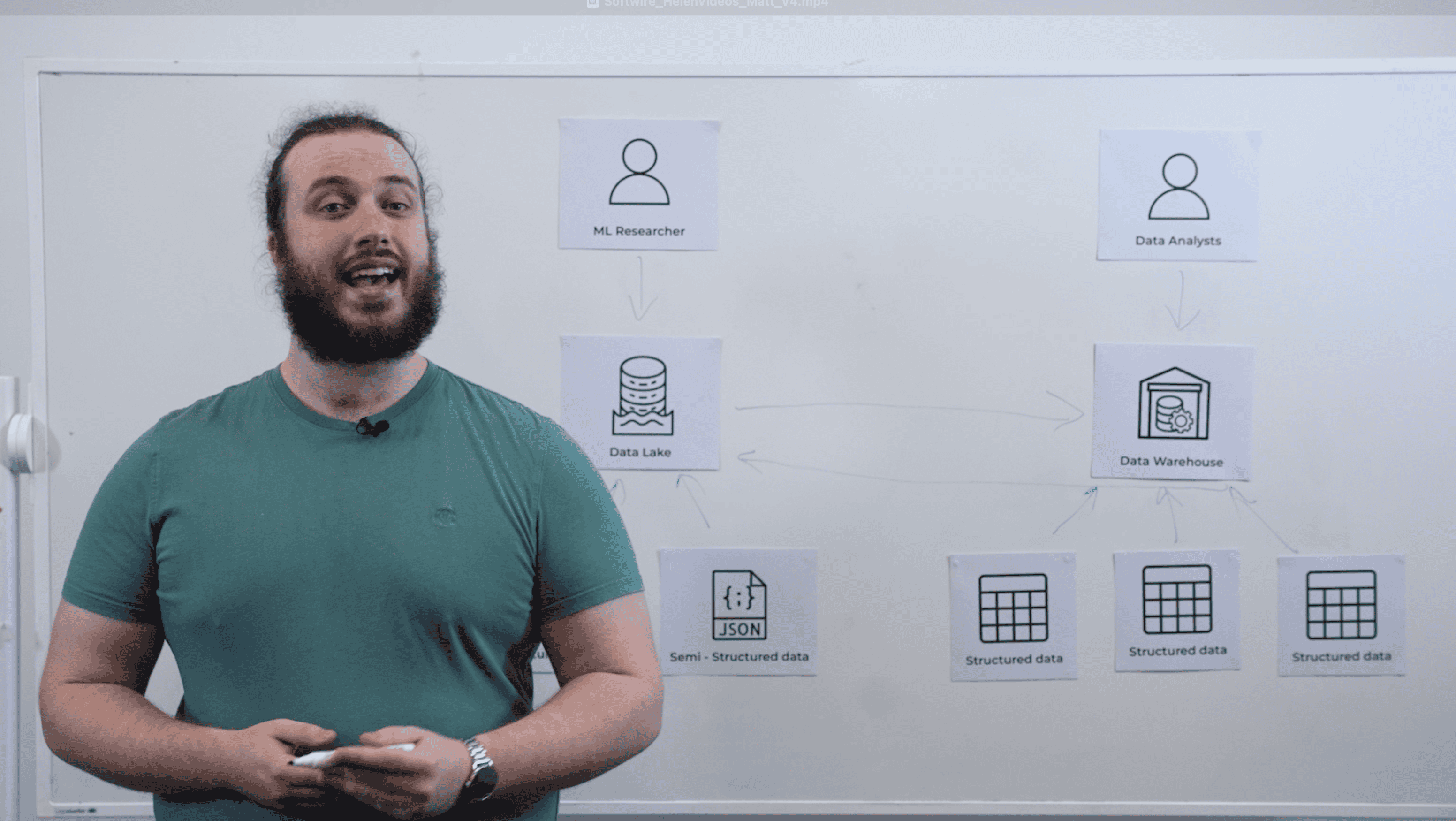

So how do you get away from using spreadsheet-based models, and instead create enterprise-grade tooling, when the people who produce the models aren’t technology experts, and the technologists aren’t domain specialists?

While it may sound counter-intuitive, we’ve found the best approach is not necessarily to remove spreadsheet-based models from the equation entirely. Instead, modellers can continue to create these spreadsheets, which then become the basis for the technical team to build out production-grade versions.

Close collaboration between the modellers and the technical team throughout the process is essential, as is comprehensive testing to ensure the productionised models produce the expected outputs.

From a tech delivery perspective, this requires full-stack development capability, with a particularly focus on secure and scalable infrastructure expertise. Teams need to use robust processes to ensure high-quality code.

Scalability

Scalability must be a core consideration, in various regards. With the expectation that your productionised models will be used by a wider audience than their spreadsheet-based predecessors, the platform must be capable of supporting more users and larger datasets, while delivering the necessary levels of performance.

The cloud is your friend here, with serverless, containers and big data storage and manipulation tools enabling you to create performant, efficient data platforms. Spot compute instances, offered by the major cloud providers, are a good way to keep processing costs down as these often-fault-tolerant workloads grow very large.

User experience design

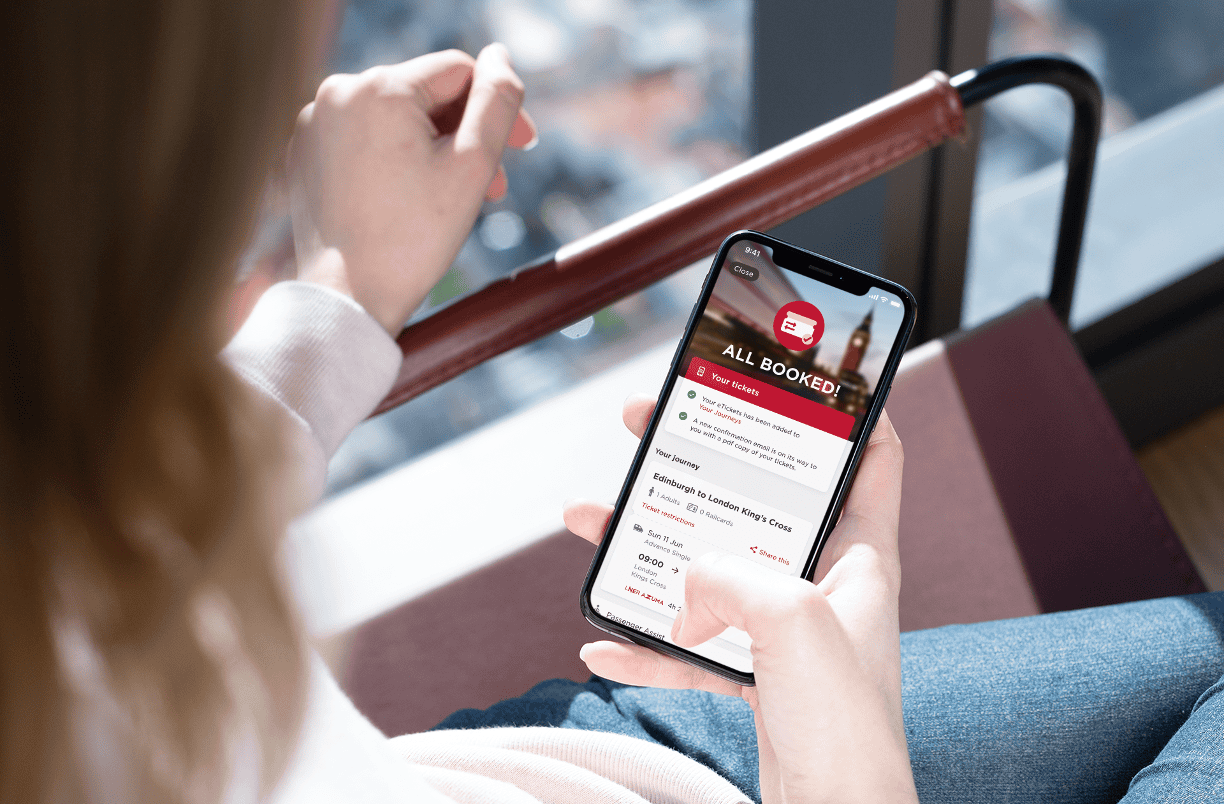

With the goal being that the modelling system be usable by a wider audience, you’ll want a user experience that’s more intuitive than what someone might create in a spreadsheet. While these systems are by their nature complex and likely to require specialist knowledge, you want to minimise the learning curve. Design the user experience in conjunction with your target users, to ensure they can do what they need to, without requiring external support.

The cloud can again be your ally here, with business intelligence and analytics tooling available to create high-performance dashboards that non-technical decision-makers can use to explore curated data intuitively.

Securing your data

Depending on the nature of the work you’re doing, the information your models are ingesting or producing might be sensitive. The models themselves may be, as well. This means you’ll require appropriate safeguards around them.

Security therefore needs to be part of your delivery process from the beginning. Here again, the cloud can be your friend, with its variety of tooling to implement access controls, multi-factor authentication and full audit capabilities with ease. You may also want to explore the ringfencing of data and environments, to tightly control which users can see which information, and prevent data from being copied deliberately or inadvertently.

Delivering greater impact

We’ve found this approach of having a technical team collaborating closely with domain experts, to turn their spreadsheet-based models into enterprise-class software, can quickly produce excellent results.

And by replacing those spreadsheets with something significantly more stable, scalable, secure and user-friendly, you’ll enable your skilled modelling team to massively expand their impact, for you and your customers.

If you’re looking for help with turning your complex spreadsheets into enterprise class tools, you’ve come to the right place. Take a look at how we can do this for you.